Learning to collaborate

Robots building together

06.18.2018

INTRODUCTION

In the future, an architect should be able to design a building and send the 3D model to a group of mobile robots that self-organize to build directly from that model. The robots would work together to build the physical manifestation of the digital model, responding to changes in the environment, updates to the model, or surprises in the material. In this thesis, I argue that collaboration is the missing ingredient in current multi-robot practices. If we are to push the boundaries of robotic assembly, then we need to make collaborative robots that can work together, without human intervention, to build large structures that they could not complete alone. As a first step, I developed a group of agents that learn to collaborate and work together to move blocks. In the following sections, I give an overview of my thesis research–outlining three models of interaction and presenting my approach to collaborative robots.

Models of INTERACTION

Before building multiagent systems, I looked to behavioral and social sciences to understand how people interact and what motivates them through their interactions. In this section, I outline cooperation, coordination, and collaboration. They each vary on multiple axes, such as level of autonomy maintained by each participant, formal structure that guides their interaction, and whether the participants are working towards shared or individual goals.

In cooperation, individuals work towards their own goals and maintain their autonomy without any formal set of rules to guide their interaction. Through coordination, individuals lose a significant amount of autonomy as they must follow a fixed set of formal rules to achieve shared goals. Lastly, in collaboration, individuals come to the table without any set structure and collectively define their own rules and the level of autonomy to maintain. They have both shared and individual goals, and most importantly, they achieve more together than they could alone.

Looking closer at collaboration, it is “an emergent process” that evolves over time (Gray, 1989). It is composed of three main phases: preconditions, process, and outcomes. The preconditions are the factors that make collaboration possible. These are the motivations that each party may have and the environmental conditions that facilitated an alliance to form. Next is the process by which collaboration happens; it is dynamic and evolving throughout the interaction. The last phase of collaboration is the outcome, which includes the metrics by which participants evaluate the success of their collaboration. In my research, I use this framework to define my own theory of robotic collaboration and evaluate the process of collaboration with these three phases.

APPROACH

I define robotic collaboration as an emergent process through which individual agents (simulated or physical), who may have different knowledge, abilities, or intelligence, interact with each other and their environment to develop new methods of interaction, allowing them to achieve more than one could alone.

Looking towards the future of robotic construction, I see groups of robots with different abilities working together to build large structures. Working towards this goal, I developed a group of agents that learn to collaborate and work together to move blocks using reinforcement learning (RL). Drawn from behavioral psychology, RL is a machine learning approach whereby agents learn an optimal behavior to achieve a specific goal by receiving rewards or penalties for good and bad behavior, respectively. RL enables me to find emergent behavioral patterns that are ideal for collaborative assembly processes.

As mentioned above, RL agents learn an optimal behavior policy by interacting with their environment through trial-and-error and receiving reward signals as feedback. More specifically, an agent exists within an environment in discrete time. At each timestep, the agent chooses an action given its current observation, and in return, the agent receives a reward and an observation from the next state.

To build collaborative agents, I took the following steps:

0. Define a toy task

1. Build initial robots and blocks to assemble

2. Develop a manually-controlled simulation environment

3. Simplify environment and adapt for RL

4. Expand to include single agent and one block

5. Expand to include multiple agents and one block

6. Prepare for simulation-to-real-world transfer

7. Move to a decentralized approach

0

DEFINE TOY TASK

First, I defined a toy task in a simplified blocks world (concept diagram below). The task requires the agents to move blocks to their specified locations and assemble a puzzle. The 2D puzzle is composed of three blocks, each unique in shape and mass, requiring different strategies for collaborative manipulation. Because manipulation is a non-trivial task, I started by giving the agents a single block to move.

1

BUILD ROBOTS AND BLOCKS

With the toy task in hand, I designed and built the first iteration of my builder robot and the lightweight blocks to assemble. Physical hardware is a priority for me because my passion is to build structures in the physical world rather than purely in simulation. The robot has an octagonal chassis, so that it has varied surfaces to push the blocks. It is 6” in diameter and is controlled by a Raspberry Pi. The robot does not have any armatures and interacts with its environment solely through pushing around blocks with its chassis.

I built a custom TouchOSC iPhone interface to control the robot and test its capabilities moving foam blocks (shown below). The blocks are made from a lightweight extruded polystyrene foam for ease of movement.

2

DEVELOP SIMULATION ENVIRONMENT

To build the first simulation environment, I used PyBox2D for the physics engine and PyGame for graphics and control. In this manually controlled environment, I tested simple actions for controlling the agent as well as tracking the correct placement of each block. The goal of the game is to control the agent to move the blocks into the proper position specified by blue dots towards the center of the screen.

3

ADAPT ENVIRONMENT FOR LEARNING

After the manual environment was complete, I adapted it for learning by using the OpenAI gym framework. With the physics and formal design of the environment and agent in place, I needed to define the observation space, action space, and reward function.

The action and observation space can either be continuous or discrete. Rewards can be discrete or shaped. For an example, let’s imagine that we are training a robotic arm to move its gripper to a specified location. If we are using reward shaping, we may choose to give the robotic agent a negative reward based on its distance to the goal position. Therefore, it would receive a smaller penalty the closer it moved to its goal. This can give the agent hints towards good behavior. In contrast, discrete rewards are typically a fixed value reward (e.g. +1, 0) for achieving some specified state or behavior. In the example of the robotic arm, this could mean receiving a reward of +1 only when it moved to the correct position, and 0 otherwise.

The main focus of this research was building and testing the custom environments as well as evaluating, selecting, and training the reinforcement learning algorithms. I started by evaluating a few influential RL algorithms: Deep Q-Network (DQN) (Mnih et al., 2013), Proximal Policy Optimization (PPO) (Schulman et al., 2017), and Deep Deterministic Policy Gradient (DDPG) (Lillicrap et al., 2015). Because the robots would be operating in the real world, I wanted the action space to be continuous. In addition, I wanted the observation space to be continuous as well as work with either low or high-dimensional data (e.g. velocity or raw pixels) because I wanted to test using raw pixels as input. DQN has proven successful with Atari games using both low and high-dimensional input data but can only use discrete actions. Both DDPG and PPO can use continuous action space, but I chose DDPG because of its proven track record with both low and high-dimensional input data.

I chose to use OpenAI’s baseline implementation of DDPG with parameter noise in order to focus on developing my environment. To begin testing, I simplified the environment to contain a single block as the agent. Its task is to move to the specified goal location and rotation (marked by the blue dot in the center of the screen) from any randomly generated starting position. For the observation, I used relative location and rotation as well as distance to the goal.

4

EXPAND TO SINGLE AGENT AND ONE BLOCK

Next, I expanded the environment to include a single agent and one block. The task is for the agent to move the block to the specified goal position within a margin of error, visualized by the blue dot in the center of the screen. The radius of the blue circle represents the margin of error. The observation included: relative location, rotation and distance of block to the goal location; global location of block’s vertices; global rotation and location of agent; relative location, rotation and distance of agent to block; and lastly a boolean representing contact between the agent and block. I used dense discrete rewards, assigning high penalties for moving away from the goal and low rewards for moving towards it.

This approach showed some initial success, in that the agent learned to approach and interact with the block. The agent typically moved the block against the wall, then attempted to get behind it. As the agent is unable to grab the block, it had some difficulty manipulating it alone–making this a perfect opportunity for collaboration!

5

EXPAND TO MULTIPLE AGENTS AND ONE BLOCK

In this phase, I expanded the environment to have two agents. The task is still for the agents to move the block to the specified location, but I increased the margin of error to improve the success rate. For initial prototyping, I used a centrally controlled approach where all agents are controlled by the same DDPG model, sharing observations and rewards. I began testing reward shaping rather than using discrete rewards. Now, the reward is based on the distance between the agent and the block and the distance between the block and the goal position. In addition, there is a reward based on the change in distance for each overall distance. This reward is intended to motivate the agent towards bigger moves.

This video shows the agents learning over time. In the early phases of training, the agents do not interact with each other or the block. As the training progresses, they begin to learn methods for moving and manipulating the block together.

6

PREPARE FOR SIMULATION-TO-REAL-WORLD TRANSFER

Once I had initial success in simulation, I needed to prepare the environment for sim2real transfer. An overhead camera provides the robot with observational data. To make sure the observation in simulation matched the real world, I updated the screen aspect ratio to match the overhead camera and unitized the dimensions. In the previous simulations, the robots can move freely in the x and y dimensions and rotate, similar to a rolling chair. This is called holonomic control, which is when the degrees of freedom match the degrees of motion. The physical robots that I built have non-holonomic control, similar to a car, where it can move forward and backward and adjust the turning radius. In my updated simulation, I adjusted the agent’s control to be non-holonomic, so its actions controlled the linear velocity and turning angle.

With this new form of control, I found that the agents had a much harder time finding the block and successfully moving it to the goal. The new limitations of control made it more difficult for the agent to randomly explore the state space. In order to help the agent, I simplified the environment by having the agent always start randomly in the left third of the screen, the block to appear in the middle, and the goal on the right. In addition, I adjusted the margin of error of the goal location over time. At the beginning of training, the margin of error is large to give the agent early signals of success, over time it becomes smaller so that the agent would learn to be more precise.

To visualize what the agents see, I designed a rendering setup that only displayed points on the screen, their orientation, and relative distances between them. The video shows excerpts from training over time. In order for the agents to find any success, it was necessary to simplify the environment so that the agents were always facing the block and the goal.

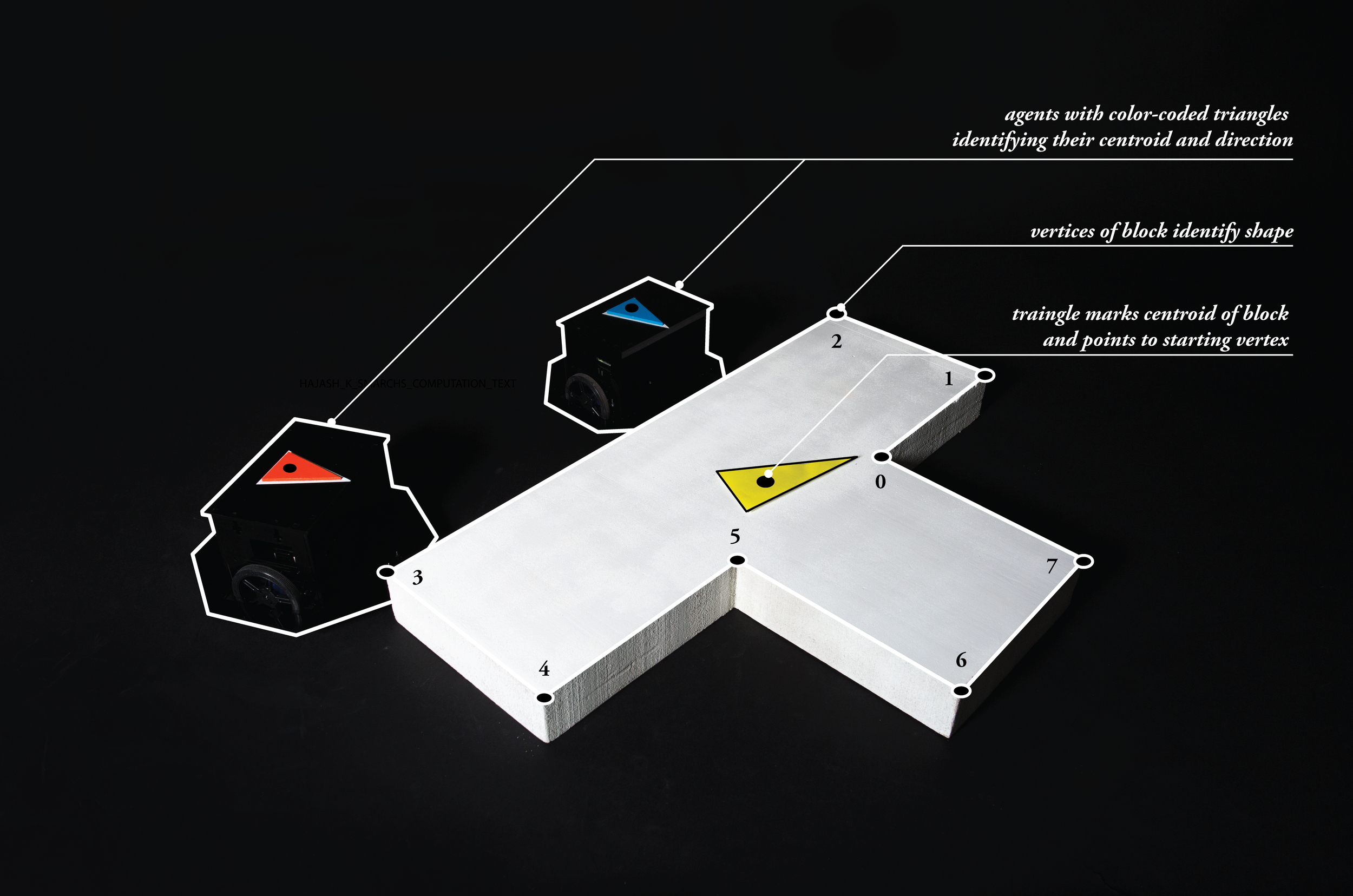

In the physical setup, I used an overhead camera to provide observational data. This was connected to a control station that would receive the observation and follow the learned policy, sending instructions to the mobile robots in the work area. I used OpenCV with color and edge detection, to extract important observational data from each frame. I used isosceles triangles to track the direction and rotation angle. To keep track of the block’s vertices, I rotated the triangle towards the starting point and resorted the list based on this point.

I redesigned the robots, using black acrylic to blend in with the black background. I enclosed the hardware to hide the wires from the camera’s view.

This video shows observational data extracted from the overhead camera. In this demonstration, the robot is manually controlled from the computer with the actions displayed on the top left of the screen. The system is able to track the robot’s position and rotation angle, the block’s position, the block’s vertices in a specified order, and whether the block is in its final goal position or not. This provides a foundation for my future work to transfer learning from simulation to real world.

7

MOVE TO DECENTRALIZED APPROACH

Lastly, I implemented this environment in a decentralized approach using Multi-Agent Deep Deterministic Policy Gradient (MADDPG) as the learning algorithm (Lowe et al., 2018). Following the structure of OpenAI’s Multi-Agent Particle Environments, I built a new environment where each agent has its own observation and reward. I promoted collaborative behavior by using a shared reward structure and by giving the agents a higher reward when they worked together to move the block instead of as individuals.

This decentralized implementation still needs further refinement and testing, but this video shows some of the interesting behavior that emerged. In this training session, the agents learned to slam into the block at extremely high speeds. Sometimes the agent would hit the block just right to push it to the goal, but other times the agent would pin the block against the wall or just bounce around the room.

ANALYSIS + CONCLUSION

Through this research, I developed numerous environments, from single to multiagent with both centralized and decentralized control. After running into significant difficulty with the non-holonomic control, I realized how the agent’s control system could constrain the agent’s ability to explore and therefore find solutions to the task. I decided to revisit the holonomic environment with centralized control and run a couple quick experiments with a few tweaks to the environment. Because my goal is to evoke collaborative behavior, I decided to make the block larger and heavier so that it is very difficult for an agent to move alone. I also dramatically increased the number of agents to five and ten. With ten, it is easy to see how too many players in a collaborative setting can get out of hand. They are all getting in each other’s way. In another episode, their behavior turned mechanical, as if they learned that by acting as gears, they could move the block better than their strength alone. Five robots appeared to be a great combination for collaboration. The team was large enough that they could move the block together, but not too large that they would get in each other’s way. This quick experiment showed some promise for a new direction moving forward.

I used the three phases of collaboration to evaluate my work. The preconditions are the environmental settings that make collaboration possible. These include the agent’s action space (holonomic vs. non-holonomic), the size and density of the blocks, how difficult it is for the agent to move and control a block alone, and the reward function (shared vs. individual). The process is what I am most interesting in–the types of collaborative behaviors the agents learn to manipulate the block. Last is the outcomes; this includes both successfully pushing the block in place and unsuccessful actions such as moving the block around or just moving in circles.

Twisting

Guiding

Pinching

Overall, the agents learned the most successful and interesting policy in the holonomic control environment. Some of these behaviors include twisting or rotating the block, having one agent pushing the block and one provided guidance, and pinching the block between them as they move. In this research, I defined a framework for training the agents and a goal for them to accomplish. I designed, built, and programmed two iterations of physical robots. I developed numerous variations of simulation environments for both single and multiple agents, evaluated RL algorithms and selected an approach, and established a method for transferring a trained policy to physical robots.

Next steps

This project is only the first step towards collaborative robotic assembly. Through this work, I concluded that, in an RL setting for robotic assembly, holonomic control provides more advantages than non-holonomic control. This is because the non-holonomic control dramatically limits the agent’s ability to randomly explore the state space–extremely important in RL environments. Next steps in this research include: building more decentralized multiagent environments using holonomic control; designing and building new robots with holonomic control using omni-wheels; developing 3D simulation environments; and using domain randomization for sim2real transfer. Other algorithmic approaches include: using curriculum learning to build more complex behaviors; testing discretized action space with PPO; learning from pixels; and using goal-conditioned hierarchical reinforcement learning.

Moving forward, we can expand this research by using the assembly problem as a testbed for elements of communication for collaboration. We can also explore other methods of collaborative behavior through the design of the environment (e.g. different blocks, goals, constraints) and robots (e.g. strength, grippers, vision). Another interesting direction in collaboration is when robots have different skillsets and abilities. Similar to when people come together to collaborate on a project, the robots could have no prior knowledge about the other’s abilities and would need to communicate with each other and negotiate roles. Alone, neither robot would be able to complete the entire task, but by working together and combining their skills they would be able to achieve much more. This is why there is power in collaboration. By creating a flexible system wherein robotic agents can adapt and learn over time, we can advance robotic assembly to operate in more dynamic and changing environments.

The full extent of this research is documented in my thesis, “Learning to Collaborate: Robots Building Together.”

References

Gray, B. (1989). Collaborating: Finding common ground for multiparty problems. Jossey-Bass.

Lillicrap, T. P., Hunt, J. J., Pritzel, A., Heess, N., Erez, T., Tassa, Y., ... Wierstra, D. (2015). Continuous control with deep reinforcement learning. ArXiv:1509.02971 [Cs, Stat]. Retrieved from http://arxiv.org/abs/1509.02971

Lowe, R., Wu, Y., Tamar, A., Harb, J., Abbeel, P., & Mordatch, I. (2017). Multi-Agent Actor-Critic for Mixed Cooperative-Competitive Environments. ArXiv:1706.02275 [Cs]. Retrieved from http://arxiv.org/ abs/1706.02275

Mnih, V., Kavukcuoglu, K., Silver, D., Graves, A., Antonoglou, I., Wierstra, D., & Riedmiller, M. (2013). Playing Atari with Deep Reinforcement Learning. ArXiv:1312.5602 [Cs]. Retrieved from http://arxiv.org/ abs/1312.5602

Schulman, J., Wolski, F., Dhariwal, P., Radford, A., & Klimov, O. (2017). Proximal Policy Optimization Algorithms. ArXiv:1707.06347 [Cs]. Retrieved from http://arxiv.org/abs/1707.06347